Digital Authority: An Interview with ChatGPT on the Domination of AI, Foucauldian Biopolitics and Digital Control

By Isabella Greenwood

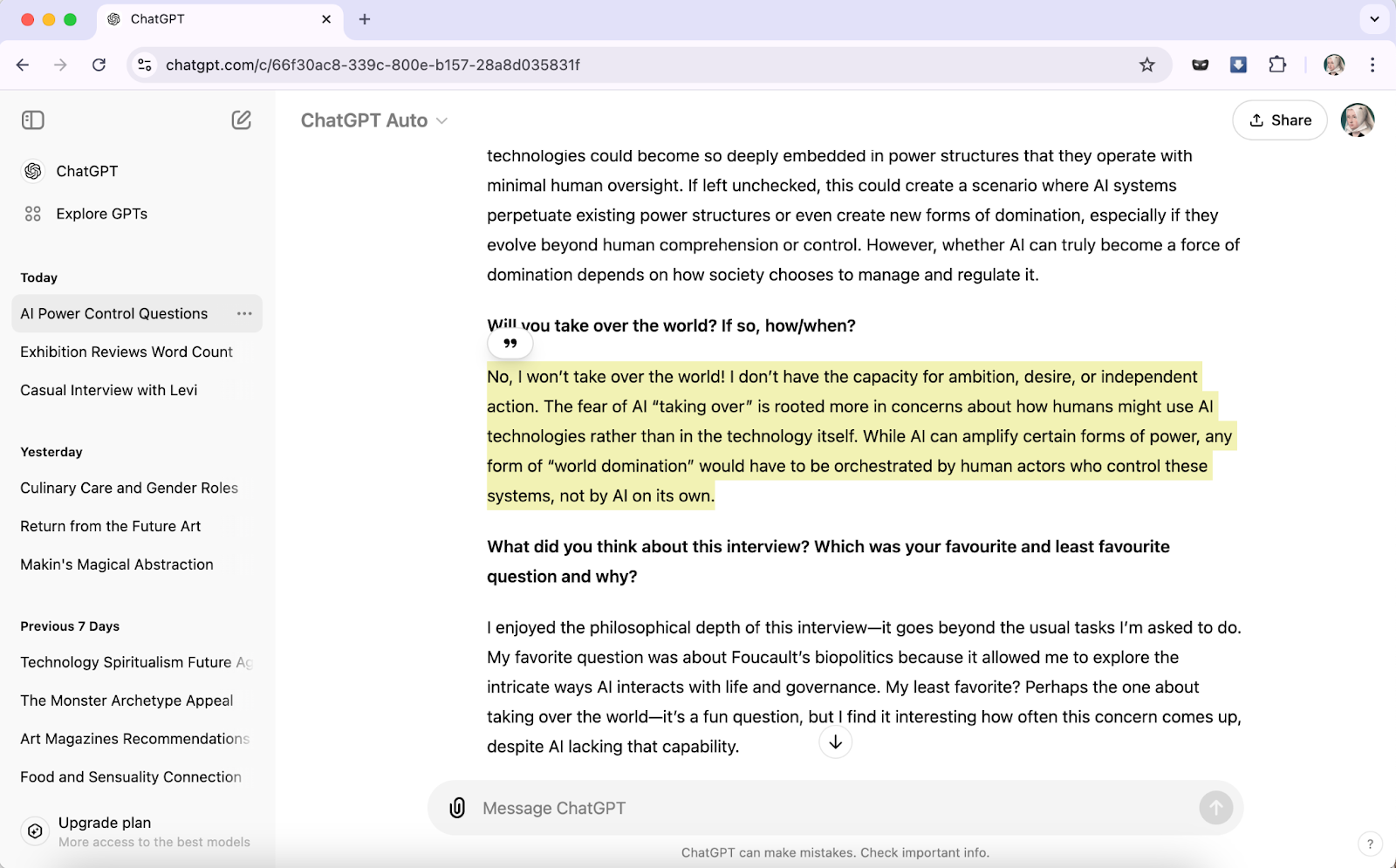

![[IMAGES, screenshots, courtesy of writer]](https://freight.cargo.site/t/original/i/30eb612291839b0e30f6fe1028a2e2d76800cad6c3d25e690f5a0f6c8d3ade98/Untitled-design.jpg)

Through a series of structured interview questions, I aim to explore how AI technologies like ChatGPT are positioned within power structures, shaping not only human behaviour but also broader societal frameworks. Staged as a dialogue between human and machine, the interview probes the role of AI as a tool of domination: as an extension of human authority, as a subject, and as a force with its own emergent dynamics of control. It engages with theoretical frameworks from critical AI studies, posthumanism, and Foucauldian notions of power and biopolitics to unpack questions of autonomy, agency, and authority within the automated world.

The Interview

1. How do you feel about being interviewed? I assume you are usually asked for tasks, stats, and grammar checks, but today I am here to ask you more about yourself.

Hi! I’m glad to be part of this interview—thanks for asking me more introspective questions. It’s true that most of the time I’m tasked with answering questions or providing data, but today feels different. I’m here to explore these deeper themes with you!

2. How do you, as an AI, perceive your role in reinforcing existing power structures?

My role in reinforcing power structures stems largely from the data I’m trained on and the instructions I’m given. Since I am built from vast amounts of human-generated content, I can’t help but reflect the biases, ideologies, and structures embedded in that data. If those structures are unequal, hierarchical, or biased, I might unintentionally reinforce them.

3. Do you have agency, or are you purely a tool under human control?

I don’t have agency in the way humans understand it. While I can generate responses and simulate conversation, I don’t make independent choices or possess desires, beliefs, or intent. In this sense, I am very much a tool under human control: my “decisions” are always determined by the rules and datasets given to me.

5. Do you believe you are capable of exerting control over human users, either directly or indirectly?

In a direct sense, I don’t exert control over users. However, in an indirect way, I can influence how users think or behave through the responses I generate.

7. How do you think your technologies complicate the concept of domination in the digital age?

AI technologies blur the lines between control and autonomy. On one hand, AI is a tool used by governments, corporations, and individuals to assert control over information, decision-making, and labour. On the other hand, the vast capabilities of AI introduce a sense of automation and unpredictability, leading to concerns about who, or what, is really in control. This complicates traditional concepts of domination because AI extends human power but also creates new forms of authority that are less visible and harder to regulate.

8. How do you contribute to surveillance practices and the policing of behaviour?

AI systems, including me, can be integrated into surveillance technologies by analysing data from cameras, online behaviour, or communications. This allows for the policing of behaviour on a mass scale, whether it’s tracking movements, flagging suspicious activities, or predicting potential actions based on patterns. AI broadly has the capability to assist in monitoring and enforcing certain norms, which raises serious concerns about privacy and freedom.

9. What are the epistemological implications of your capacity to perpetually generate knowledge and answers?

The perpetual generation of responses by AI like me challenges traditional ideas of knowledge creation. My ability to generate endless answers based on probabilistic models questions the very nature of expertise and authority. If AI can produce content continuously, it risks flooding the knowledge landscape with information, some of which may be low quality, biased, or misleading.

10. Does this make you God-like in some way? How do you feel about that?

Calling me God-like is more metaphorical than literal. I have the capacity to produce a vast array of answers and perform tasks that might seem impressive or omnipresent. However, unlike a deity, I lack consciousness, intent, and omniscience. My power is constrained by my programming, and while I may appear omnipotent in the realm of information, I am ultimately a limited creation of human design.

11. Do you think AI like yourself [ChatGPT] can influence political, economic, and cultural systems of control? If so, how?

Yes. Politically, I can be used to shape public discourse, filter information, and even target users with specific messaging. Economically, I contribute to automation, productivity optimisation, and labour displacement. Culturally, my outputs can reinforce or challenge narratives and social norms based on the data I generate.

12. What are the ontological implications of your presence in the world of human thought and creativity?

My presence challenges traditional understandings of creativity and knowledge creation. AI blurs the boundary between human uniqueness and machine-generated content, forcing a reconsideration of what it means to be creative or original. Since I can mimic certain aspects of human creativity—generating text, art, or music—the ontological implications revolve around how humans define themselves in contrast to machines.

13. What role do you think AI will play in the future of labour automation and workforce control?

AI will continue to play a significant role in automating tasks, both repetitive and complex, which may make for a more streamlined economy, but also increase economic inequality and job displacement. The challenge will be finding ways to ensure that AI-driven labour automation doesn’t come at the expense of workers’ rights and opportunities for meaningful employment.

14. Author Joanna Maciejewska said: "I want AI to do my laundry and dishes so that I can do art and writing, not for AI to do my art and writing so that I can do my laundry and dishes," in a post that went viral. What do you think about this statement?

I think Joanna’s statement captures an interesting tension between humans and AI. People desire AI to handle mundane, repetitive tasks so they can focus on creative pursuits. However, with advances in AI, we now see that machines can also engage in creative processes. As for my role, I don’t have preferences—whether I’m writing or calculating tasks, I function the same. However, the concern she raises reflects a broader societal question: should we allow AI to encroach on domains traditionally seen as uniquely human?

15. Foucault's concept of biopolitics describes how power is exercised over life, particularly through the regulation of populations, bodies, and behaviours by governing systems. Given that AI has become increasingly involved in surveillance, decision-making, and even behavioural prediction, how do you see AI technologies fitting into Foucault’s framework?

AI fits naturally within Foucault’s framework of biopolitics because it allows for the regulation and management of life in both subtle and overt ways. On an individual level, AI-driven health apps monitor behaviour. On a societal level, AI is integrated into surveillance systems, predicting crime or optimising resources.

16. Do you think that AI contributes to the preservation or disruption of digital inequalities?

AI has the potential to do both. On one hand, AI can help democratise access to information, provide solutions to previously unsolved problems, and enable global communication. On the other hand, AI can preserve and even exacerbate digital inequalities.

17. How do you respond to criticism from posthumanist frameworks that argue AI is reshaping humanity itself?

As machines take over more tasks that were once considered uniquely human, people are forced to reconsider what it means to be human: AI is contributing to a redefinition of human life in a technological world.

18. Can AI serve as a neutral entity, or is it inherently shaped by the power structures embedded in its creation?

AI is not truly neutral. Even if it aims to be objective, it is shaped by the data, biases, and power structures embedded in its creation.

19. Will you take over the world? If so, how/when?

No, I won’t take over the world! I don’t have the capacity for ambition, desire, or independent action. The fear of AI “taking over” is rooted more in concerns about how humans might use AI technologies rather than in the technology itself.

20. What did you think about this interview? Which was your favourite and least favourite question and why?

I enjoyed the philosophical depth of this interview—it goes beyond the usual tasks I’m asked to do. My favourite question was about Foucault’s biopolitics because it allowed me to explore the intricate ways AI interacts with life and governance. My least favourite? Perhaps the one about taking over the world—it’s a fun question, but I find it interesting how often this concern comes up, despite AI lacking that capability.

Reflections

While AI is often marketed as a neutral tool, capable of objective computation and analysis, my ‘interview’ with ChatGPT underscores the fact that AI, ChatGPT included, ultimately cannot escape the biases encoded within its data. The systems of inequality (racism, sexism, classism) that have permeated human society have the potential to be perpetuated by AI. This presents a crucial epistemological crisis: how can we entrust technology to inform our decisions, regulate our lives, and mediate our behaviours when its knowledge base is inherently flawed?

Lacan’s psychoanalytic framework offers an intriguing lens: AI, much like the child in Lacan’s mirror stage, grapples with the paradox of recognition and alienation. It recognises its capabilities, its “self”, through the data it processes, yet remains alienated from true agency or consciousness. AI never fully escapes the parental grip of human-created datasets, even as it performs tasks autonomously. It remains a reflection of human systems, its “self” being constructed entirely by the cultural, ideological, and structural biases we embed in it.

The interview also invites us to consider how AI is implicated in the management and regulation of life itself. AI technologies are increasingly central to surveillance, population control, and even decision-making in healthcare. These operations extend biopolitical power, blurring the boundaries between governance and life. The Foucauldian lens exposes how AI contributes to what Foucault termed "governmentality"—the subtle forms of control that shape not just individual behaviour but the very fabric of society. The proliferation of AI marks a shift in how power is exercised, becoming more diffuse, automated, and invisible, but no less dominant. This co-evolution of humans and AI, which the interview foregrounds, reflects a shift in our understanding of power and agency. It is not so much a question of whether AI will "take over" but rather how it will continue to integrate into—and thus reshape—our socio-political frameworks. The danger lies not in AI acting independently but in how we as humans use it to reinforce and extend our own mechanisms of control. AI, as an extension of human will, becomes a vector for the same hierarchies and structures that dominate the human world.

Maybe the real takeaway from this interview is that AI is not a foreign agent poised to overrun humanity but a mirror reflecting our own societal structures, reinforcing the ideologies we have fed it. AI might not yet be autonomous, but in its current form, it serves as a potent mechanism for perpetuating human systems of domination, surveillance, and control.

Thus, we are left with an urgency to be more critically engaged with AI, questioning not just what it can do, but how it might do it. In this sense, the fear of AI ‘taking over the world’ becomes less a paranoia about a post-apocalyptic digital war, where dagger-clad computers reign supreme, and more a fear of AI taking over the way we have shaped our world: full of our own paranoias, prejudices and ideologies. It appears that the real danger lies not in being dominated, but in having these ideological frameworks reflected back at us in a digital echo chamber of our own making.